Research

The developing nervous system finds clever solutions to everyday perception and action problems, but it is not clear when and how these solutions are learned. For example, adults perceiving and acting under uncertainty often perform close to an ideal statistical decision-maker, yet my recent studies show striking immaturities in this ability until late childhood, in perception, action, and neural representations.

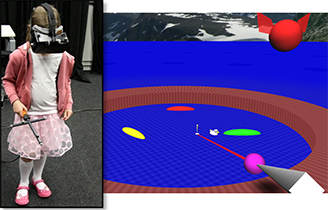

My current research asks how we learn adaptive perception and action in complex environments. This includes training “new senses” in virtual reality and comparing behaviour and learning with the predictions of mathematical models. I am also interested in the development of spatial cognition, and in applying new technology to clinical problems (e.g. sensory augmentation, infant visual assessment).

Current lab members
Dr Meike Scheller – Post-doc
Dr Chris Allen – Post-doc
Melissa Ramsay – Research Assistant
Olaf Kristiansen – PhD student
Join us

Past lab members
Durham: Dr Reneta Kiryakova • Dr Becca Wedge Roberts • Dr James Negen • Laura Bird • Dr Hannah Roome • Brittney Chere
UCL: Dr Tessa Dekker • Dr Pete Jones • Dr Karin Petrini • Dr Eliza Burton • Dr Sara Garcia • Dr Louisa Kulke • Sarah Kalwarowsky • Rachel Fahy • Alicia Remark • Jennifer Bales

Lab resources

We have a large lab equipped for motion capture (using Vicon) and real-time immersive VR (using Oculus and Vizard). Other resources in the department include facilities for multisensory psychophysics, EEG, eye-tracking and fMRI.

Some current and recent research projects

1. Learning cue combination

How do we learn to perceive and act efficiently under uncertainty? Why does it take children such a long time to do so? One model system we have been using to investigate these questions is echolocation: training people to use a “new” sense (or a new sensory skill). Are these new sensory estimates immediately combined with other (e.g. visual) estimates to reduce uncertainty, or are there barriers to this (e.g. correctly representing reliability, or biased estimates from the new sense)? (Paper: initial study) This work can shed light on what happens during development, when children are first learning to use and combine senses, and can inform sensory substitution strategies for children and adults with sensory loss. Work in collaboration with Lore Thaler, funded by ESRC.
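The usual benchmark for efficient cue combination is the ideal-observer (maximum-likelihood) model, in which each cue is weighted by its reliability, i.e. the inverse of its noise variance. The Python sketch below illustrates that textbook model under the assumption of independent, unbiased Gaussian noise on each cue; the function name `combine_cues` and the example numbers are hypothetical illustrations, not the lab's analysis code or data.

```python
from typing import Sequence, Tuple


def combine_cues(estimates: Sequence[float], sigmas: Sequence[float]) -> Tuple[float, float]:
    """Reliability-weighted (maximum-likelihood) combination of cues.

    Hypothetical helper for illustration. Assumes each cue i gives an unbiased
    estimate corrupted by independent Gaussian noise with SD sigmas[i].
    Returns (combined estimate, predicted SD of the combined estimate).
    """
    if not estimates or len(estimates) != len(sigmas):
        raise ValueError("need at least one cue and one sigma per estimate")
    # Reliability of each cue is its inverse variance.
    reliabilities = [1.0 / s ** 2 for s in sigmas]
    total = sum(reliabilities)
    # Each weight is the cue's share of the total reliability (weights sum to 1).
    weights = [r / total for r in reliabilities]
    combined = sum(w * x for w, x in zip(weights, estimates))
    # Predicted variance of the combined estimate is 1 / (sum of reliabilities),
    # which is never larger than the variance of the best single cue.
    combined_sigma = (1.0 / total) ** 0.5
    return combined, combined_sigma


if __name__ == "__main__":
    # Hypothetical numbers: a visual distance estimate and a new echo-based one.
    estimate, sd = combine_cues([100.0, 110.0], [4.0, 8.0])
    print(f"combined estimate = {estimate:.2f} cm, SD = {sd:.2f} cm")
```

With a hypothetical visual estimate of 100 cm (SD 4 cm) and an echo-based estimate of 110 cm (SD 8 cm), the weights are 0.8 and 0.2, giving a combined estimate of 102 cm with an SD of about 3.58 cm, lower than either cue alone. An observer who does not integrate, for example by relying on one cue at a time, can at best match the 4 cm SD of the better single cue, which is why comparing measured precision against this prediction is a common test for integration.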

2. Perception with new sensory signals

A much broader project on the potential to learn new sensory skills enabled by technology started in 2019, funded for 5 years by a EUR 2m Consolidator Grant, NewSense, from the European Research Council. Our central aim is to understand the underlying mechanisms and potential for plasticity in the learning of new or augmented signals. We are continuing this work with the model system of depth perception (see above), as well as extending it to other domains such as material perception and motor tasks. Beyond whether a new skill is deployed successfully, our key predictions concern the extent to which its use parallels what we know about more standard or “native” perception. These include its participation in standard multisensory interactions (e.g. weighted cue combination), the relative “automaticity” with which it is used, and the extent to which low-level sensory brain areas represent and compute with the new signal.

3. Learning to compute visual depth

Humans employ a range of strategies to compute depth from retinal projections. One strategy is to “fuse” multiple depth estimates (e.g. from perspective, motion, stereopsis), which reduces uncertainty. We have shown that, unlike adults, children below 10 years still retain access to single depth cues, and do not show fusion of these cues in visual cortex. Another strategy is the use of prior knowledge (e.g. about light direction, to interpret shading). We find that this too is still developing at age 10 years or later. These studies show that it takes a strikingly long time to learn to compute visual depth. We are interested in which processes of learning or maturation underlie the development of these abilities, and how these differ in atypical development. Work in collaboration with Tessa Dekker, Andrew Welchman, and Denis Mareschal, funded by ESRC and the Swire Trust.

4. Development of spatial cognition

Different organisms display a wide variety of solutions to spatial problems, from computationally simple “view matching” strategies to internal models of spatial layouts (“cognitive maps”). Human development suggests a progression towards the ability to use flexible internal models, but how and when these are learned is still unclear. Immersive virtual reality lets us distinguish between different representations of space in novel ways, and investigate the contributions of visual cues known to play specific roles in the neural coding of space (e.g. boundaries). Funded by the James S McDonnell Foundation and ESRC.